Singapore Airshow – Smart AI Cockpit: ST Engineering’s digital crew member for the battlefield

At the Singapore Airshow 2026, ST Engineering showcased its AI Cockpit, which represents a game‑changing evolution in the way crews interact with combat vehicles, whether infantry fighting vehicles, unmanned ground systems or multi‑platform systems operating in a multi‑domain environment. Designed from the outset as a true combat assistant, it fits squarely within the “human‑machine teaming” concept and aims to transform the cockpit into a genuine decision centre, enhanced by artificial intelligence.

At the heart of the concept, the AI Cockpit acts as a voice‑controlled combat assistant, able to understand natural‑language commands, deliver critical information and propose tactical options at a pace aligned with modern operations. Integrated on platforms such as the Terrex s5 HED 8×8 and the Taurus UGV, it becomes the key interface between the crew and the ecosystem of sensors, weapons and drones operating in swarms within architectures such as the Manned‑Unmanned Teaming Operating System (MUMTOS). In practice, the AI Cockpit streamlines the observe–orient–decide–act loop by filtering data flows and highlighting what matters most for the mission: priority threats, routes of advance, firing windows, and risks of fratricide or exposure.

From a functional standpoint, the AI Cockpit combines several building blocks: robust speech recognition and synthesis in noisy combat environments, AI‑driven decision engines, real‑time analytics, and a multimodal human‑machine interface (displays, alerts, voice feedback). The assistant relies on a layer of “Physical AI” under development across the group, intended to give physical systems – vehicles, robots, drones – coherent capabilities in perception, reasoning and coordinated action, underpinned by a major multi‑year AI investment. This architecture is designed to be sensor‑ and effector‑agnostic: it can ingest data from radars, optronics, LIDAR, RF sensors and even chemical detectors such as eNose, feeding models that detect, classify and prioritise tactical events before turning them into alerts or recommendations for the crew.

One of the AI Cockpit’s key advantages lies in how it reduces crew cognitive load, a critical challenge for operators of highly digitised vehicles in data‑saturated environments. The voice assistant allows commanders, drivers and gunners to trigger key functions – changing driving modes, prioritising targets, reconfiguring sensors, requesting a tactical picture – without taking their hands off the controls or navigating complex menus. In parallel, the AI filters and fuses video and telemetry streams, much like the tools developed for UAS such as DroNet, which convert raw feeds into actionable insights so that only mission‑critical information is displayed, supporting both survivability and effectiveness. Information is then prioritised according to context: in contact, threat alerts are brought to the fore, while during transit or logistical phases, navigation, predictive maintenance and synchronisation with the wider force take precedence.
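The context‑dependent prioritisation described above can be illustrated with a minimal sketch. It is purely illustrative and does not reflect ST Engineering’s implementation: the phase names, category labels, weights and the `prioritise` function are all assumptions made for the example.

```python
from dataclasses import dataclass
from enum import Enum


class Phase(Enum):
    CONTACT = "contact"
    TRANSIT = "transit"


# Hypothetical weights per mission phase; a higher weight pushes a category
# up the display order, and a weight of 0 hides it entirely.
WEIGHTS = {
    Phase.CONTACT: {"threat": 3, "navigation": 1, "maintenance": 0, "sync": 1},
    Phase.TRANSIT: {"threat": 1, "navigation": 3, "maintenance": 2, "sync": 2},
}


@dataclass(frozen=True)
class Item:
    category: str   # one of the keys used in WEIGHTS (assumed labels)
    text: str       # message shown or spoken to the crew
    severity: int   # 1 (low) to 5 (high), assumed to be set upstream


def prioritise(items, phase, limit=3):
    """Rank items by phase weight times severity and keep the top few."""
    weights = WEIGHTS[phase]
    scored = [(weights.get(item.category, 0) * item.severity, item) for item in items]
    # Drop anything the current phase considers irrelevant (score of 0).
    visible = [(score, item) for score, item in scored if score > 0]
    visible.sort(key=lambda pair: pair[0], reverse=True)
    return [item for _, item in visible[:limit]]
```

With the same three inputs (a threat alert, a navigation update and a maintenance warning), this sketch would put the threat first in the contact phase and hide the maintenance warning, whereas in transit navigation and maintenance would move ahead of the threat.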

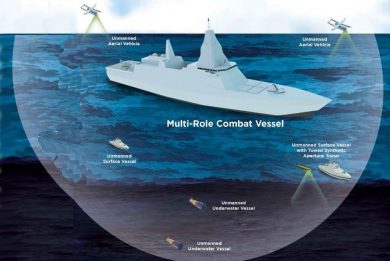

Designed to plug into a broader command‑and‑control architecture, the AI Cockpit is tightly coupled with MUMTOS, an AI‑enabled C2 platform orchestrating the combined action of manned vehicles, UGVs, UAS and other autonomous systems. In this construct, the cockpit no longer controls only the host vehicle, but also serves as a console for tasking, supervising and reconfiguring reconnaissance or support drones directly through voice commands or simplified interactions. The AI thus contributes to swarm management, spatial and spectral deconfliction, and the prioritisation of sensors and data links according to the tactical situation, while upholding cybersecurity and communications resilience as design imperatives.

Despite the growing level of automation, ST Engineering emphasises that the human remains central in the decision loop, with the AI Cockpit conceived as an aid to command judgement, not a replacement for it. Algorithms are trained on scenarios generated at scale by AI‑powered rapid scenario generation tools, which use generative AI to create varied and realistic training environments and to test and refine AI behaviours in extreme or rare situations. The same scenario‑generation capability is used to train crews, enabling them to learn how to interact with the assistant, understand its limitations and failure modes, and maintain the ability to take back full manual control instantly in degraded conditions. The goal is to strike a balance between operational performance, explainability of AI recommendations and robustness against jamming, sensor loss or cyberattack.

While the AI Cockpit is currently highlighted on land platforms such as the Terrex s5 HED and the Taurus UGV, the underlying technology is designed to be transferable to other domains, notably air and sea, building on ST Engineering’s experience in digital cockpit upgrades and avionics integration. The progressive integration of additional capabilities – more advanced natural‑language understanding, more compact embedded models, tighter coupling with predictive maintenance and fleet‑management systems – points towards a truly cognitive cockpit, able to anticipate crew needs rather than simply respond to commands. Ultimately, the AI Cockpit fits into a wider vision of an augmented combat platform, where every vehicle, robot or drone contributes to a distributed cognitive network, while still offering its crew a unified, coherent interface firmly oriented towards decision superiority.

Photos by J. Roukoz